42 soft labels deep learning

MixNN: Combating Noisy Labels in Deep Learning by Mixing ... the noisy labels during training, resulting in poor performance. During an "early learning" phase, deep neural networks were observed to fit the clean samples before memorizing the mislabeled samples. In this paper, we dig deeper into the representation distributions in the early learning phase and discover that ...

GitHub - gorkemalgan/deep_learning_with_noisy_labels ... The repository collects a list of papers that shed light on the label noise phenomenon in deep learning, a list of works on label noise beyond classification, and sources on the web (Noisy-Labels-Problem-Collection, Learning-with-Label-Noise). Clothing1M is a real-world noisy labeled dataset that is widely used for benchmarking, and the repository reports test accuracies on this dataset.

Data Labeling Software: Best Tools For Data Labeling in ... Playment is a multi-featured data labeling platform that offers customized and secure workflows to build high-quality training datasets with ML-assisted tools and sophisticated project management software. It offers annotations for various use cases, such as image annotation, video annotation, and sensor fusion annotation.

Soft labels deep learning

Label smoothing with Keras, TensorFlow, and Deep Learning ... This type of label assignment is called soft label assignment. Unlike hard label assignment, where class labels are binary (i.e., positive for one class and negative for all other classes), soft label assignment allows:

- the positive class to have the largest probability,
- while all other classes have a very small probability.

GitHub - subeeshvasu/Awesome-Learning-with-Label-Noise: A ...

- 2017-Arxiv - Deep Learning is Robust to Massive Label Noise. [Paper]
- 2017-Arxiv - Fidelity-weighted learning. [Paper]
- 2017 - Self-Error-Correcting Convolutional Neural Network for Learning with Noisy Labels. [Paper]
- 2017-Arxiv - Learning with confident examples: Rank pruning for robust classification with noisy labels. [Paper] [Code]

Label Smoothing: An ingredient of higher model accuracy ... Say your labels are 0 for cat and 1 for not cat, and you set label_smoothing = 0.2. Using the equation new_onehot_labels = onehot_labels * (1 - label_smoothing) + label_smoothing / num_classes, a "not cat" example becomes:

new_onehot_labels = [0 1] * (1 - 0.2) + 0.2 / 2 = [0 1] * 0.8 + 0.1 = [0.1 0.9]

These are soft labels, instead of the hard labels 0 and 1.
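
The cat / not-cat arithmetic can be checked with a small sketch. It assumes the formula smoothed = one_hot * (1 - label_smoothing) + label_smoothing / num_classes; the function name `smooth_labels` is hypothetical and not taken from any library.

```python
def smooth_labels(one_hot, label_smoothing):
    """Label smoothing: y * (1 - label_smoothing) + label_smoothing / K.

    `one_hot` is a list of 0/1 targets; K is the number of classes.
    (Hypothetical helper for illustration.)
    """
    if not 0.0 <= label_smoothing <= 1.0:
        raise ValueError("label_smoothing must be in [0, 1]")
    k = len(one_hot)
    return [y * (1.0 - label_smoothing) + label_smoothing / k for y in one_hot]


# Class 0 = cat, class 1 = not cat; this example is "not cat".
print(smooth_labels([0, 1], 0.2))  # approximately [0.1, 0.9]
```

The largest probability stays on the true class and the smoothed vector still sums to 1, which is exactly the property of soft label assignment described above.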

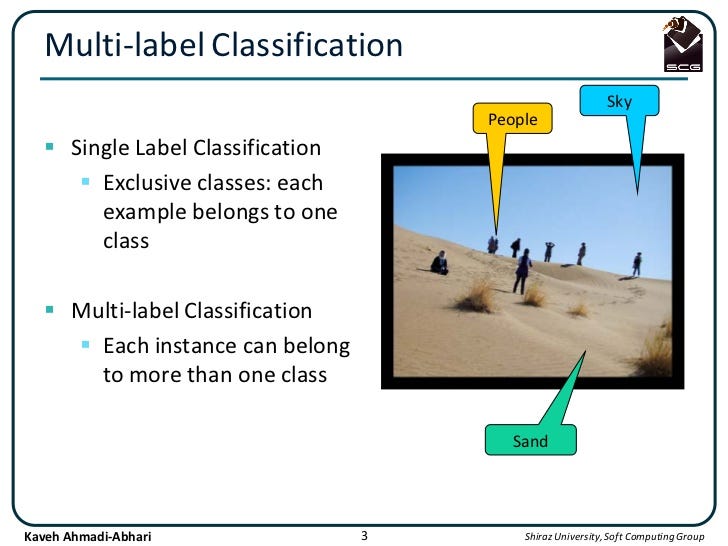

comparison - What is the definition of "soft label" and ... A soft label is one which has a score (probability or likelihood) attached to it. For example, an element may be a member of the class in question with a probability/likelihood score of 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.

(PDF) Deep learning with noisy labels: Exploring ... Label noise is a common feature of medical image datasets. Left: The major sources of label noise include inter-observer variability, human annotator's error, and errors in computer-generated...

An Introduction to Confident Learning: Finding and ... In this post, I discuss an emerging, principled framework to identify label errors, characterize label noise, and learn with noisy labels, known as confident learning (CL), open-sourced as the cleanlab Python package. cleanlab is a framework for machine learning and deep learning with label errors like how PyTorch is a ...

Unsupervised Person Re-Identification by Soft Multilabel ... To overcome this problem, we propose a deep model for soft multilabel learning for unsupervised RE-ID. The idea is to learn a soft multilabel (a real-valued label likelihood vector) for each unlabeled person by comparing the unlabeled person with a set of known reference persons from an auxiliary domain.
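
To make "comparing the unlabeled person with a set of known reference persons" concrete, here is a simplified sketch. It is not the paper's exact formulation: cosine similarity, a softmax over references, the `temperature` value, and the function names are all assumptions chosen for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        raise ValueError("feature vectors must be non-zero")
    return dot / (na * nb)


def soft_multilabel(feature, reference_features, temperature=0.1):
    """Assumed scheme: softmax over similarities to each reference person.

    Entry k is a real-valued likelihood that `feature` resembles reference k.
    """
    sims = [cosine(feature, r) / temperature for r in reference_features]
    m = max(sims)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in sims]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 2-D features for one unlabeled person and three references.
print(soft_multilabel([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]))
```

The result is a likelihood vector over reference persons rather than a single hard identity, which matches the notion of a soft label as a score attached to each class.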

Unsupervised deep hashing through learning soft pseudo ... We design a deep auto-encoder network, SPLNet, which can automatically learn soft pseudo-labels and generate a local semantic similarity matrix. The soft pseudo-labels represent the global similarity between inter-cluster RS images, and the local semantic similarity matrix describes the local proximity between intra-cluster RS images.

Label Smoothing Explained - Papers With Code. Label Smoothing is a regularization technique that introduces noise for the labels. This accounts for the fact that datasets may have mistakes in them, so maximizing the likelihood log p(y ∣ x) directly can be harmful. Assume that, for a small constant ϵ, the training set label y is correct with probability 1 − ϵ and incorrect otherwise.

[2008.00627] Learning to Purify Noisy Labels via Meta Soft ... By viewing the label correction procedure as a meta-process and using a meta-learner to automatically correct labels, we could adaptively obtain rectified soft labels iteratively according to current training problems without manually preset hyper-parameters.

MetaLabelNet: Learning to Generate Soft-Labels from Noisy ... Soft-labels are generated from extracted features of data instances, and the mapping function is learned by a single layer perceptron (SLP) network, which is called MetaLabelNet. Then, the base classifier is trained using these generated soft-labels. These iterations are repeated for each batch of training data.
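
The Papers With Code noise model (label y correct with probability 1 − ϵ) implies a soft target directly, once you add one assumption: when the label is wrong, the true class is equally likely to be any of the other K − 1 classes. Under that assumption the target puts 1 − ϵ on y and ϵ / (K − 1) on every other class. This differs slightly from the ϵ / K variant used in the Keras example. The function name below is hypothetical.

```python
def smooth_from_noise_model(label, num_classes, epsilon):
    """Soft target when `label` is correct with probability 1 - epsilon.

    Assumption: errors are spread uniformly over the other classes,
    so each wrong class receives epsilon / (num_classes - 1).
    """
    if num_classes < 2:
        raise ValueError("need at least two classes")
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    off = epsilon / (num_classes - 1)
    return [1.0 - epsilon if k == label else off for k in range(num_classes)]


# 4 classes, true label 2, epsilon = 0.3
print(smooth_from_noise_model(2, 4, 0.3))  # approximately [0.1, 0.1, 0.7, 0.1]
```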

Loss and Loss Functions for Training Deep Learning Neural ... Almost universally, deep learning neural networks are trained under the framework of maximum likelihood using cross-entropy as the loss function. Most modern neural networks are trained using maximum likelihood. This means that the cost function is […] described as the cross-entropy between the training data and the model distribution.

[2007.05836] Meta Soft Label Generation for Noisy Labels. The existence of noisy labels in the dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which can jointly generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion. Our approach adapts the meta-learning paradigm to estimate optimal label distribution by checking gradient directions on both noisy training data and noise-free meta-data. In order to iteratively update ...

Understanding Deep Learning on Controlled Noisy Labels. In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...
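
Cross-entropy works unchanged with soft labels: the target distribution simply has more than one non-zero entry. A minimal sketch follows; the function name and the `eps` clamp (to avoid log(0)) are assumptions, not part of any cited library.

```python
import math


def soft_cross_entropy(target, predicted, eps=1e-12):
    """Cross-entropy H(target, predicted) = -sum_k target[k] * log(predicted[k]).

    `target` may be hard (one-hot) or soft; it must sum to 1.
    `predicted` is the model's probability distribution over the same classes.
    """
    if len(target) != len(predicted):
        raise ValueError("target and predicted must have the same length")
    if abs(sum(target) - 1.0) > 1e-6:
        raise ValueError("target must be a probability distribution")
    # Clamp predictions so log never sees exactly zero.
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, predicted))


prediction = [0.2, 0.8]
print(soft_cross_entropy([0.0, 1.0], prediction))  # hard target
print(soft_cross_entropy([0.1, 0.9], prediction))  # smoothed target
```

With a one-hot target this reduces to the usual negative log-likelihood of the true class; a soft target also rewards probability mass placed on the other classes in proportion to their target weight.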

Deep Learning Series - Session 2: Automated and Iterative Labeling for Images and Signals Video ...

Semi-Supervised Learning With Label Propagation ... Nodes in the graph then have soft labels, or label distributions, based on the labels or label distributions of nearby connected examples in the graph. Many semi-supervised learning algorithms rely on the geometry of the data induced by both labeled and unlabeled examples to improve on supervised methods that use only the labeled data.
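
The propagation idea can be sketched in a few lines. This is a simple iterative variant, assumed for illustration: labeled nodes are clamped to one-hot distributions, and each unlabeled node repeatedly takes the edge-weighted average of its neighbours' distributions. The function name, the fixed iteration count, and the uniform starting distribution are assumptions.

```python
def propagate_labels(weights, seed_labels, num_classes, iterations=100):
    """Iterative label propagation producing a soft label per node.

    weights: symmetric n x n list of non-negative edge weights.
    seed_labels: dict {node index: class index} for the labeled nodes.
    Returns a list of class distributions, one per node.
    """
    n = len(weights)
    dist = []
    for i in range(n):
        if i in seed_labels:
            row = [0.0] * num_classes
            row[seed_labels[i]] = 1.0  # labeled nodes start (and stay) one-hot
        else:
            row = [1.0 / num_classes] * num_classes  # unlabeled: uniform
        dist.append(row)

    for _ in range(iterations):
        new = []
        for i in range(n):
            total = sum(weights[i])
            if i in seed_labels or total == 0:
                new.append(dist[i])  # clamp labeled nodes; isolated nodes keep theirs
                continue
            row = [0.0] * num_classes
            for j in range(n):
                w = weights[i][j]
                if w:
                    for c in range(num_classes):
                        row[c] += w * dist[j][c]
            new.append([v / total for v in row])  # weighted average of neighbours
        dist = new
    return dist


# Chain 0 - 1 - 2 - 3: node 0 is class 0, node 3 is class 1.
chain = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
for node, soft in enumerate(propagate_labels(chain, {0: 0, 3: 1}, 2)):
    print(node, soft)
```

On this chain the middle nodes converge to roughly [2/3, 1/3] and [1/3, 2/3]: each unlabeled node ends up with a label distribution that leans toward its closer labeled neighbour.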

Label-Free Quantification You Can Count On: A Deep ... We ran an experiment to see if we can get the same result when we analyze unstained brightfield images (Figure 1, left) with our cellSens deep learning module. Figure 1: Brightfield image (left) and fluorescent image (right) of cell nuclei. To train the software, we provided fluorescence and brightfield images of 40 positions in a 96-well plate.

Learning Soft Labels via Meta Learning - Apple Machine ... One-hot labels do not represent soft decision boundaries among concepts, and hence, models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

Understanding Dice Loss for Crisp Boundary Detection | by ... In deep learning and computer vision, people are working hard on feature extraction to output meaningful representations for various kinds of vision tasks. In some tasks, we only focus on geometry ...

Deep Learning from Noisy Image Labels with Quality ... Specifically, it consists of two important layers: (1) the contrastive layer estimates the quality variable in the embedding space to reduce the effect of noise; (2) the additive layer aggregates prior predictions and noisy labels as a posterior to train the classifier.

How to make use of "soft" labels in binary classification ... If you choose soft prediction, the output of the model would look like: [0.9, 0.1]; and the output from hard prediction would be "0" (the index) or "fraud". The soft prediction gives you more information about the model's confidence in prediction. The higher the value for the predicted class, the more confident and accurate (in general) the
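
The difference between the two kinds of output can be shown directly: a hard prediction is just the argmax of the soft one, and the soft vector also carries the confidence. The class names below are hypothetical, chosen so that index 0 corresponds to "fraud" as in the example above.

```python
def hard_prediction(soft_output, class_names):
    """Collapse a soft prediction (class probabilities) into a hard one.

    Returns the winning index, its name, and the probability behind it.
    """
    best = max(range(len(soft_output)), key=lambda k: soft_output[k])
    return best, class_names[best], soft_output[best]


soft_output = [0.9, 0.1]                # soft prediction from the example
class_names = ["fraud", "not fraud"]    # hypothetical label names
index, name, confidence = hard_prediction(soft_output, class_names)
print(index, name, confidence)  # 0 fraud 0.9
```

Keeping the soft output lets you apply your own decision threshold later, whereas the hard label discards the 0.9 versus 0.1 margin.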

A Soft-Labeled Self-Training Approach - CNRS, by A. Mey. The idea is to use the supervised trained classifier to label the unlabeled points and in this way enlarge the training data. This paper aims to show that a ...

What is Label Smoothing? A technique to make your model ... Label smoothing is a regularization technique that addresses both problems.

Overconfidence and Calibration. A classification model is calibrated if its predicted probabilities of outcomes reflect their accuracy. For example, consider 100 examples within our dataset, each with predicted probability 0.9 by our model.
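
Continuing the 100-example illustration: if the model is calibrated, roughly 90 of those predictions should be correct. One common way to quantify the gap is expected calibration error (ECE), which bins predictions by confidence and averages |confidence - accuracy| weighted by bin size. ECE is not mentioned in the snippet; the sketch below, including its 10 equal-width bins and the function name, is an illustrative assumption.

```python
def expected_calibration_error(confidences, correct, num_bins=10):
    """ECE with equal-width confidence bins (assumed binning scheme).

    confidences: predicted probability of the predicted class, in [0, 1].
    correct: booleans, whether each prediction was right.
    """
    if not confidences or len(confidences) != len(correct):
        raise ValueError("need equal-length, non-empty inputs")
    n = len(confidences)
    bins = [[] for _ in range(num_bins)]
    for conf, ok in zip(confidences, correct):
        if not 0.0 <= conf <= 1.0:
            raise ValueError("confidences must be in [0, 1]")
        idx = min(int(conf * num_bins), num_bins - 1)  # 1.0 goes in the last bin
        bins[idx].append((conf, ok))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(1 for _, ok in b if ok) / len(b)
        ece += (len(b) / n) * abs(avg_conf - accuracy)
    return ece


confs = [0.9] * 100
print(expected_calibration_error(confs, [True] * 90 + [False] * 10))  # close to 0
print(expected_calibration_error(confs, [True] * 60 + [False] * 40))  # about 0.3
```

An overconfident model (0.9 confidence, 60% accuracy) shows up as a large ECE; softening the training targets is one way people try to reduce that gap.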

Labelling Images - 15 Best Annotation Tools in 2022. Label Coverage. The next important thing to check is how many labels are available for you to use. For example, on-device users have around 400 or more labels covering the most common things, whereas cloud users have more than 10,000 labels belonging to many different categories. Specific entity IDs ...

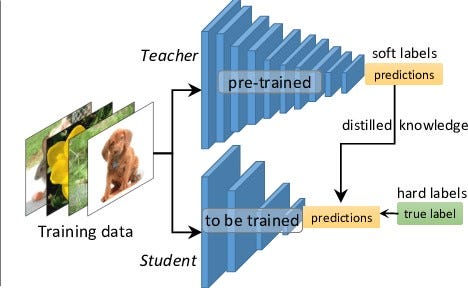

Knowledge distillation in deep learning and its ... Soft labels refer to the output of the teacher model. In the case of classification tasks, the soft labels represent the probability distribution among the classes for an input sample. The second category, on the other hand, considers works that distill knowledge from other parts of the teacher model, optionally including the soft labels.
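
A teacher's soft labels are typically its softmax outputs. Distillation setups often add a temperature parameter T that flattens the distribution so the student sees more of the relative similarity between classes; the snippet above does not mention a temperature, so treat it as an assumption here, along with the hypothetical teacher logits.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Teacher soft labels: softmax(logits / T). Higher T gives softer labels."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


teacher_logits = [4.0, 1.0, 0.5]  # hypothetical teacher outputs for one input
print(softmax_with_temperature(teacher_logits, 1.0))  # peaked, top class ~0.93
print(softmax_with_temperature(teacher_logits, 4.0))  # flatter distribution
```

Both outputs rank the classes identically; the temperature only changes how much probability the non-top classes receive.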

(PDF) Learning from Noisy Labels with Deep Neural Networks: A Survey. Hwanjun Song, Minseok Kim, Dongmin Park, Jae-Gil Lee. Abstract: Deep learning has achieved remarkable success in numerous domains with...

A semi-supervised learning approach for soft labeled data. Abstract: In some machine learning applications, using soft labels is more useful and informative than crisp labels. Soft labels indicate the degree of ... INSPEC Accession Number: 11792484. Date Added to IEEE Xplore: 13 January 2011. Print ISBN: 978-1-4244-8134-7. DOI: 10.1109/ISDA.2010.5687034.
